Automating software tests: One project’s journey

In this post, ObjectStyle’s test engineer Oksana Koyro shares the story of how her software project went from manual testing to test automation. We hope it will be useful to anyone who is getting started with automated testing.

Q: Oksana, could you tell us a bit about this project and what was going on when you joined?

I joined this project as a manual tester about three and a half years ago. At the time, it was a pretty old piece of legacy software that was being modernized. The software is basically a small business app that also has a client-facing part. But even though it’s for an SMB, it serves the legal industry, so quality is critical for the client, and the software has to work like clockwork.

When we got started on that project, it was a bumpy road. The previous vendor didn’t leave any test documentation behind. This was particularly difficult for me as a tester, because I had almost no way of identifying the software’s expected behavior. I was piecing together bits of information scattered across JIRA tasks. Another problem was that those tickets didn’t describe the product or how it should behave from the end user’s point of view. A ticket could say something like “Fix cache in …,” and I had no idea where in the front end to look for the change.

Q: When and why did you decide to start automating tests?

As the project progressed and we got to know the software on a deeper level, we figured we could do better planning, give more precise estimates, and release on a regular cadence. Also, to make the software more maintainable, the programmers started writing unit tests, and we set up a continuous integration (CI) pipeline.

Around the same time, it became obvious that certain tests, especially regression tests, could be automated. Not only was this going to save us time and money in the long run, but it was also a great way for me to stay motivated. Sooner or later, manual testers get tired of filling out the same forms and clicking the same buttons, especially when the product is stable.

Although I was a manual tester at the time, it was decided that I would learn automation. Many companies do this: they train their manual testers in new skills. The obvious advantage is that the manual tester already knows the product and is familiar with the test cases they’re going to automate.

Q: What were your first steps?

I took a course in Java programming for testers and played with Selenium IDE to write my first tests. I don’t remember it very well, because it was quite a long time ago. All in all, Selenium IDE is easy to get started with and has a gentle learning curve. It records the steps you take in the app, including the locators that point to your buttons, fields, etc. In theory, this sounds awesome, but in reality, the recorder can make mistakes, and you need to debug things. Another limitation is that Selenium IDE is too simple and isn’t really meant for maintaining a large number of tests in the long run.

As things got more complex, I started using Selenide, a test automation library for Java. It’s an open-source library built by a software development company from Estonia. Selenide adds a powerful layer on top of Selenium that lets you write complex tests in clean, easily readable code. For someone like me with no previous experience writing Java code, this was a real lifesaver. Basically, Selenide hides a lot of unnecessary boilerplate code, so you can use a much simpler syntax. That way, you get the power of Java and a simple interface for writing UI tests at the same time.

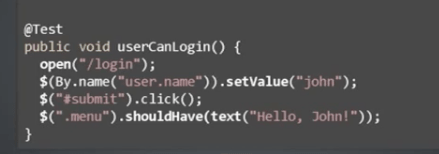

For instance, Selenide code reads almost like plain English: you don’t have to be a programmer to understand what a test does.
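Here is a minimal sketch of what a simple Selenide test might look like, assuming JUnit 5. The URL and the element IDs (`#username`, `#password`, `#login-button`, `#welcome-message`) are hypothetical placeholders, not taken from the project:

```java
import static com.codeborne.selenide.Condition.text;
import static com.codeborne.selenide.Condition.visible;
import static com.codeborne.selenide.Selenide.$;
import static com.codeborne.selenide.Selenide.open;

import org.junit.jupiter.api.Test;

class LoginTest {

    @Test
    void userCanLogIn() {
        // Hypothetical URL: Selenide starts a browser (Chrome by default) on first use
        open("http://localhost:8080/login");

        // Hypothetical locators; $() takes a CSS selector
        $("#username").setValue("test.user");
        $("#password").setValue("secret");
        $("#login-button").click();

        // The check is retried until it passes or Selenide's timeout (4 s by default) expires,
        // so no explicit WebDriverWait is needed
        $("#welcome-message").shouldBe(visible).shouldHave(text("Welcome"));
    }
}
```

The same test in plain Selenium would also need driver setup, explicit waits, and teardown; Selenide takes care of those behind `open()` and the `should*` checks.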

Q: Where do you run the test scripts once you’ve written them?

The project is built in IntelliJ IDEA, so I run my tests there, too. We also use TeamCity for continuous integration (CI), with Git for version control.

Every morning, TeamCity checks for changes and starts the automated tests if it detects any. Then you can see whether any tests have failed and act on it. If a test fails, you can always investigate the cause by looking at the TeamCity logs and screenshots: a snapshot of the browser is saved the moment a test fails.
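In a Selenide-based suite, such failure screenshots can come from Selenide itself, and TeamCity can then publish them as build artifacts. A minimal sketch, assuming JUnit 5; the `BaseUiTest` class name and the `build/reports/tests` folder are hypothetical:

```java
import com.codeborne.selenide.Configuration;
import com.codeborne.selenide.junit5.ScreenShooterExtension;
import org.junit.jupiter.api.BeforeAll;
import org.junit.jupiter.api.extension.ExtendWith;

// Hypothetical base class that all UI test classes would extend
@ExtendWith(ScreenShooterExtension.class) // saves a browser screenshot when a test fails
abstract class BaseUiTest {

    @BeforeAll
    static void configureReports() {
        Configuration.screenshots = true;    // on by default; set explicitly for clarity
        Configuration.savePageSource = true; // also keep the page HTML at the moment of failure
        // Hypothetical folder; add it to TeamCity's artifact paths to see the files with the build
        Configuration.reportsFolder = "build/reports/tests";
    }
}
```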

Recently, we’ve also started using Testcontainers to make sure our test results are not skewed by cache, browser cookies, or other “subjective” factors. With Testcontainers, each test starts in a fresh browser instance in an isolated Docker container, which shields it from outside noise. You can also get a video recording of every test session, or only of the sessions where tests failed. I had an automated test that kept failing, but everything was fine when I checked it manually. So it was a false positive. Once we got Testcontainers in place, the test stopped failing.
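A minimal sketch of a fresh-browser-per-test setup, assuming JUnit 5, the Testcontainers `BrowserWebDriverContainer`, and Selenide. The class name, the app URL, and the recordings folder are hypothetical. Because the `@Container` field is an instance field, Testcontainers starts a new browser container for each test method, and `RECORD_FAILING` keeps videos only for failed tests (`RECORD_ALL` would keep every session):

```java
import static com.codeborne.selenide.Condition.visible;
import static com.codeborne.selenide.Selenide.$;
import static com.codeborne.selenide.Selenide.open;

import com.codeborne.selenide.WebDriverRunner;
import java.io.File;
import org.junit.jupiter.api.AfterEach;
import org.junit.jupiter.api.BeforeEach;
import org.junit.jupiter.api.Test;
import org.openqa.selenium.chrome.ChromeOptions;
import org.openqa.selenium.remote.RemoteWebDriver;
import org.testcontainers.containers.BrowserWebDriverContainer;
import org.testcontainers.containers.BrowserWebDriverContainer.VncRecordingMode;
import org.testcontainers.junit.jupiter.Container;
import org.testcontainers.junit.jupiter.Testcontainers;

@Testcontainers
class IsolatedBrowserTest {

    // Instance (non-static) field: a fresh Chrome container per test method,
    // so no cache or cookies carry over from one test to the next
    @Container
    BrowserWebDriverContainer<?> chrome = new BrowserWebDriverContainer<>()
            .withCapabilities(new ChromeOptions())
            // Keep a video only for failed tests; hypothetical folder, assumed to exist already
            .withRecordingMode(VncRecordingMode.RECORD_FAILING, new File("build/recordings"));

    private RemoteWebDriver driver;

    @BeforeEach
    void useContainerBrowser() {
        // Point Selenide at the browser running inside the container
        driver = new RemoteWebDriver(chrome.getSeleniumAddress(), new ChromeOptions());
        WebDriverRunner.setWebDriver(driver);
    }

    @AfterEach
    void quitBrowser() {
        driver.quit();
    }

    @Test
    void dashboardLoads() {
        // Hypothetical URL; it must be reachable from inside the container
        // ("localhost" there means the container itself, not the developer's machine)
        open("http://app.example.test/dashboard");
        $("#dashboard").shouldBe(visible);
    }
}
```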

Q: Do your tests cover the entire functionality?

We have quite an old product with lots of features built a long time ago, and some functionality is used by 1.5 people every 100 years on average (kidding). So, we decided it didn’t make sense to write automated tests for all of them.

In general, I write scripts for testing old, established functionality (that is, for regression tests). Sometimes I also cover a piece of new functionality with an automated test if I know the feature is here to stay: for example, if we’re adding a new tab and I know it won’t go anywhere any time soon, I can include it in my scripts.

Q: What are the benefits of automation? Do you feel like it’s already saving you time and effort?

Absolutely. The automated tests that start every morning now take about 1 to 1.5 hours to complete, whereas going over the same test cases manually used to take half a day or even an entire working day.

Besides, since we introduced CI to this project, the whole release process looks very different from how we did things about a year and a half ago. There’s now more communication between us and the client. We now have a dedicated product owner, and we understand the requirements much better. Hopefully, that means we’re headed in the right direction. 🙂
